── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──

✔ dplyr 1.1.4 ✔ readr 2.1.5

✔ forcats 1.0.0 ✔ stringr 1.5.1

✔ ggplot2 3.5.1 ✔ tibble 3.2.1

✔ lubridate 1.9.3 ✔ tidyr 1.3.1

✔ purrr 1.0.2

── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

✖ dplyr::filter() masks stats::filter()

✖ dplyr::lag() masks stats::lag()

ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errors

── Attaching packages ────────────────────────────────────── tidymodels 1.2.0 ──

✔ broom 1.0.5 ✔ rsample 1.2.1

✔ dials 1.3.0 ✔ tune 1.2.1

✔ infer 1.0.7 ✔ workflows 1.1.4

✔ modeldata 1.3.0 ✔ workflowsets 1.1.0

✔ parsnip 1.2.1 ✔ yardstick 1.3.1

✔ recipes 1.1.0

── Conflicts ───────────────────────────────────────── tidymodels_conflicts() ──

✖ scales::discard() masks purrr::discard()

✖ dplyr::filter() masks stats::filter()

✖ recipes::fixed() masks stringr::fixed()

✖ dplyr::lag() masks stats::lag()

✖ yardstick::spec() masks readr::spec()

✖ recipes::step() masks stats::step()

• Dig deeper into tidy modeling with R at https://www.tmwr.org

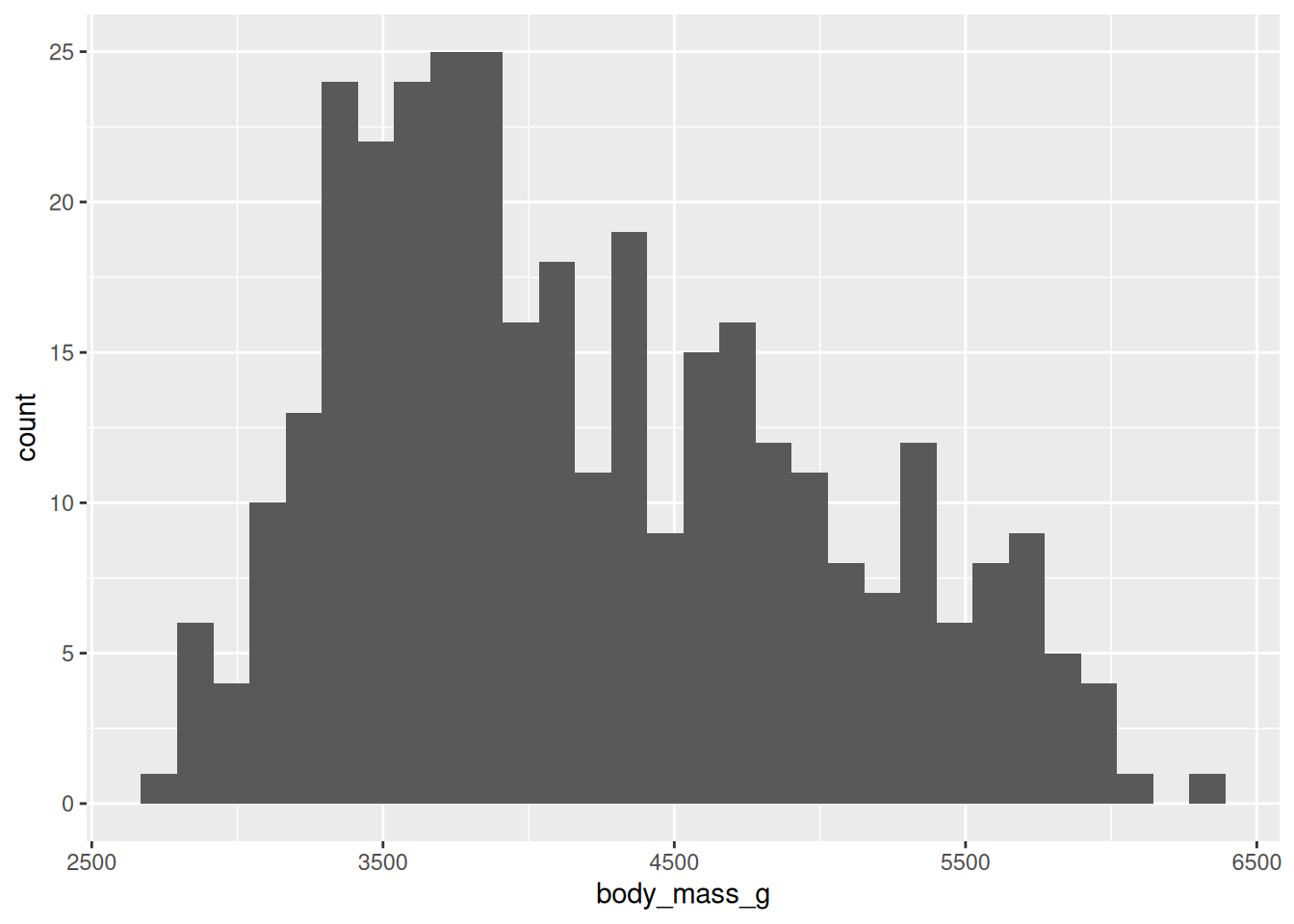

Attaching package: 'palmerpenguins'

The following object is masked from 'package:modeldata':

penguins